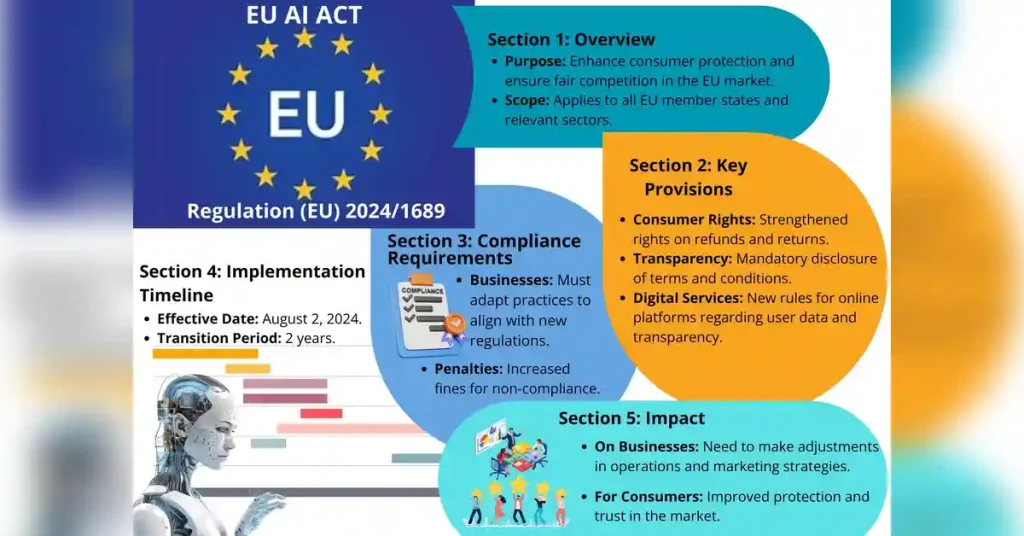

EU AI Act news has become a focal point for businesses, developers, regulators, and policymakers as Europe moves closer to fully enforcing the world’s first comprehensive artificial intelligence law. What began as a regulatory proposal has now matured into a legally binding framework that will influence how AI systems are built, deployed, and governed across multiple industries. With implementation phases approaching, attention has shifted from theoretical debate to practical impact.

One reason interest in EU AI Act latest updates continues to grow is the law’s risk-based structure. Instead of treating all AI systems equally, the regulation classifies them based on potential harm to individuals and society. This approach places stricter obligations on high-risk applications such as hiring tools, credit assessment systems, and biometric technologies, while allowing lower-risk AI to operate with minimal interference. As a result, EU AI Act regulation news is especially relevant for organizations trying to determine where their technology fits within this framework.

The global reach of the EU artificial intelligence law has further amplified its significance. The regulation applies not only to companies based in the European Union but also to any organization whose AI systems affect people within EU borders. This means that non-EU companies must also monitor EU AI Act news closely, as compliance obligations can apply regardless of physical location. Similar to the impact of GDPR, the AI Act is already shaping internal policies and product decisions worldwide.

Another major driver behind ongoing EU AI Act latest updates is uncertainty around compliance expectations. While the law establishes high-level principles, regulators are continuing to release guidance on documentation, transparency, data governance, and human oversight. For many companies, understanding AI Act compliance requirements now requires coordination between legal, technical, and operational teams. Compliance is no longer a future consideration; it is becoming a current strategic priority.

Developments in EU AI Act regulation news also reflect broader debates about innovation and competitiveness. Supporters argue that clear rules will increase trust in artificial intelligence and encourage responsible adoption. Critics, however, raise concerns about regulatory burden, especially for startups and smaller companies. These tensions are shaping how the EU artificial intelligence law is interpreted, enforced, and potentially refined over time.

As enforcement timelines draw closer, EU AI Act news has become more concrete and less speculative. Recent updates have clarified penalty structures, supervisory authorities, and phased implementation schedules. Each clarification helps organizations better understand their obligations under the evolving AI Act compliance requirements, while reducing uncertainty around enforcement risks.

In the sections ahead, this article examines the most important EU AI Act latest updates, explains how the regulation works in practice, and explores what the law means for businesses, developers, and the future of artificial intelligence governance in Europe.

The European Union’s approach to artificial intelligence regulation has reached a decisive phase. What began as a policy discussion about managing emerging technologies has now evolved into one of the most comprehensive AI regulatory frameworks in the world. As lawmakers finalize implementation details, interest in EU AI Act news continues to surge—particularly among businesses, developers, and policymakers trying to understand what this law actually means in practice.

This article breaks down the most recent developments, explains the structure of the EU AI Act, and outlines how its enforcement will reshape the AI landscape in Europe and beyond.

What Is the EU AI Act?

The EU AI Act is the world’s first broad, binding legal framework designed specifically to regulate artificial intelligence systems. Its core objective is to ensure that AI technologies used within the European Union are safe, transparent, and aligned with fundamental rights, while still encouraging innovation.

Unlike sector-specific regulations, the AI Act applies across industries. Any organization that develops, deploys, or markets AI systems in the EU, or whose AI outputs are used in the EU, falls within its scope.

The law introduces a risk-based approach, meaning not all AI systems are treated equally. Instead, regulatory obligations scale based on how much potential harm an AI system could cause.

Latest EU AI Act News and Regulatory Updates

Recent EU AI Act news has focused less on whether the law will exist and more on how it will be applied and enforced. The legislation itself is largely settled, but key updates have clarified timelines, enforcement mechanisms, and practical compliance expectations.

Among the most important developments:

- Confirmation of phased enforcement, allowing businesses time to adapt

- Additional guidance on general-purpose AI models, including large language models

- Clarification on penalty thresholds and supervisory authority roles

- Ongoing work on technical standards that will define compliance benchmarks

These updates signal that regulators are shifting from policy design to real-world implementation. For companies working with AI, preparation is no longer optional—it is operationally necessary.

Understanding the Risk-Based Classification System

At the heart of the EU AI Act is its tiered risk framework. This structure determines which systems are banned, which are heavily regulated, and which face minimal oversight.

1. Unacceptable Risk AI (Banned)

AI systems that pose a clear threat to fundamental rights are prohibited outright. These include technologies that enable:

- Social scoring, whether by public authorities or private actors

- Certain forms of biometric surveillance

- Manipulative AI that exploits vulnerable groups

Once enforcement begins, these systems must be withdrawn from the EU market.

2. High-Risk AI Systems

High-risk systems are allowed but subject to strict obligations. They commonly appear in areas such as:

- Recruitment and employment screening

- Credit scoring and financial decision-making

- Medical devices and health diagnostics

- Law enforcement and border control

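Organizations that provide or deploy high-risk AI must meet extensive compliance requirements, including documentation, risk management, and human oversight. Most of these obligations fall on the provider that builds the system and places it on the market.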

3. Limited-Risk AI

These systems face transparency obligations, not bans. For example, users must be informed when they are interacting with an AI system such as a chatbot, and AI-generated or manipulated media must be disclosed as such.

4. Minimal-Risk AI

Most everyday AI tools—recommendation engines, photo enhancement apps, spam filters—fall into this category and remain largely unregulated.
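
As a rough illustration of how a team might record a first-pass triage against these four tiers, here is a minimal Python sketch. The labels and the `triage` helper are hypothetical names chosen for this example, and the mapping only restates the examples given in this article. It is not a legal classification tool; real classification depends on the regulation's annexes and the specific context of use.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited outright
    HIGH = "high"                  # allowed, subject to strict obligations
    LIMITED = "limited"            # transparency obligations
    MINIMAL = "minimal"            # largely unregulated


# Illustrative mapping built only from the examples in this article.
# The keys are hypothetical labels, not terms defined by the regulation.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "manipulation_of_vulnerable_groups": RiskTier.UNACCEPTABLE,
    "recruitment_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "medical_diagnostics": RiskTier.HIGH,
    "border_control": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "ai_generated_media": RiskTier.LIMITED,
    "recommendation_engine": RiskTier.MINIMAL,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> Optional[RiskTier]:
    """Return the example tier for a known label, or None if it needs review."""
    return EXAMPLE_USE_CASES.get(use_case)
```

Returning `None` for an unknown label, rather than defaulting to the minimal tier, keeps unreviewed systems visible instead of silently treating them as low risk.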

EU AI Act Compliance Requirements Explained

Compliance under the EU AI Act is not a single checkbox. It is an ongoing governance process, particularly for high-risk AI systems.

Key obligations include:

Risk Management and Documentation

Organizations must identify foreseeable risks, test AI systems accordingly, and maintain detailed technical documentation.

High-Quality Data Governance

Training data must be relevant, representative, and free from systemic bias wherever possible.

Human Oversight

High-risk AI systems cannot operate without supervision. Clear procedures must allow human intervention or override when necessary.

Transparency and User Information

Users must understand how and when AI is being used, especially in decision-making contexts.

Post-Market Monitoring

Once deployed, AI systems must be continuously monitored for emerging risks and performance issues.

These requirements place AI governance on a similar level of importance as cybersecurity or data protection.
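
One way to keep these obligations visible inside an engineering team is a simple per-system checklist. The sketch below is an assumption about how that could look in Python: the class name and field names are invented for this example and mirror the five headings above, not any official template.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class HighRiskChecklist:
    """Hypothetical tracker; one flag per obligation heading in this article."""
    risk_management_documented: bool = False
    data_governance_reviewed: bool = False
    human_oversight_defined: bool = False
    user_information_provided: bool = False
    post_market_monitoring_active: bool = False

    def open_items(self) -> List[str]:
        """List the obligations that are still marked as not done."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


checklist = HighRiskChecklist(
    risk_management_documented=True,
    human_oversight_defined=True,
)
print(checklist.open_items())
```

Because compliance is an ongoing process rather than a single checkbox, a record like this would need to be revisited whenever the system, its data, or its context of use changes.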

EU AI Act Timeline and Enforcement Schedule

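One of the most common questions in EU AI Act news coverage concerns timing. The regulation as adopted fixes the main application dates itself, while supporting standards and guidance are still being developed.

Key Phases

- Formal adoption: Completed; the regulation entered into force on 1 August 2024

- Grace period: Organizations are given time to align systems before each set of obligations applies

- Banned practices enforcement: Comes first, applying from 2 February 2025

- General-purpose AI model obligations: Apply from 2 August 2025

- High-risk system obligations: Apply later, with most requirements applying from 2 August 2026 and those for AI embedded in regulated products from 2 August 2027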

This staged rollout is designed to prevent disruption while still ensuring accountability.
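
The staged rollout can be sketched as a simple date lookup. The dates below are an assumption based on the application dates in Article 113 of the regulation as published in 2024; later amendments could shift them, so treat the table as illustrative. The `MILESTONES` list and `applicable_on` function are hypothetical names for this example.

```python
from datetime import date
from typing import List

# Assumed application dates, taken from the regulation as published in 2024.
# Check the current legal text before relying on any of them.
MILESTONES = [
    (date(2024, 8, 1), "entry into force"),
    (date(2025, 2, 2), "prohibited practices"),
    (date(2025, 8, 2), "general-purpose AI model obligations"),
    (date(2026, 8, 2), "most remaining obligations, including many high-risk requirements"),
    (date(2027, 8, 2), "high-risk AI embedded in regulated products"),
]


def applicable_on(day: date) -> List[str]:
    """Return the phases whose application date is on or before the given day."""
    return [label for start, label in MILESTONES if day >= start]


print(applicable_on(date(2025, 3, 1)))
```

A lookup like this only answers which phases have started; whether a given obligation actually applies to a specific system still depends on its risk tier and the organization's role.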

Impact on Businesses, Startups, and Developers

The EU AI Act’s influence extends far beyond Europe. Because it applies to any AI system affecting EU residents, global companies must also comply.

Large Enterprises

Established firms will need to integrate AI compliance into existing risk and legal frameworks. For many, this resembles GDPR-style governance, but with deeper technical oversight.

Startups and SMEs

Smaller companies face higher proportional burdens, but regulatory sandboxes and innovation support measures are intended to reduce friction.

AI Developers and Model Providers

Model transparency, training data disclosure, and usage limitations are becoming central concerns—especially for general-purpose AI.

In practice, AI compliance is rapidly becoming a competitive differentiator rather than a mere legal obligation.

Penalties and Enforcement Mechanisms

The EU AI Act includes some of the most significant penalties ever associated with technology regulation.

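Fines can reach:

- Up to €35 million or 7% of global annual turnover, whichever is higher, for prohibited practices

- Up to €15 million or 3% of global annual turnover for most other violations

- Up to €7.5 million or 1% of global annual turnover for supplying incorrect information to authorities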

Enforcement will be handled by national authorities, coordinated at the EU level to ensure consistency. Regulators have emphasized that enforcement will focus on risk severity and intent, not punishment for its own sake.
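
To make the "fixed amount or percentage of turnover" structure concrete, the following Python sketch computes the upper bound of a fine. It assumes the figures in Article 99 of the regulation (for example €35 million or 7% for prohibited practices, with the higher of the two applying, and the lower of the two applying to SMEs). The function name and parameters are hypothetical, and the result is a statutory ceiling, not a prediction of what an authority would impose.

```python
def max_fine_eur(fixed_cap_eur: float, turnover_pct: float,
                 global_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of a fine under an assumed 'fixed cap or % of turnover' rule."""
    pct_amount = global_turnover_eur * turnover_pct / 100
    # Assumption from Article 99: the higher amount applies in general,
    # the lower amount applies to SMEs and start-ups.
    if is_sme:
        return min(fixed_cap_eur, pct_amount)
    return max(fixed_cap_eur, pct_amount)


# A company with EUR 1 billion in global turnover, prohibited-practice tier:
# 7% of turnover (EUR 70 million) exceeds the EUR 35 million fixed cap.
print(max_fine_eur(35_000_000, 7, 1_000_000_000))
```

For a smaller firm the percentage branch usually falls below the fixed cap, which is why the SME rule can make a large difference to exposure.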

How the EU AI Act Shapes Global AI Regulation

Another recurring theme in EU AI Act news is its global ripple effect. Similar to GDPR, the AI Act is already influencing policy discussions in other jurisdictions.

Countries and regions are watching closely to see:

- How risk classification works in practice

- Whether innovation slows or adapts

- How cross-border enforcement is handled

For multinational companies, aligning with EU standards early may simplify compliance elsewhere in the future.

What to Expect Next

Looking ahead, several developments are likely:

- Publication of detailed technical standards

- Expansion of AI regulatory sandboxes

- Increased enforcement activity and precedent-setting cases

- Further clarification on general-purpose AI obligations

The EU AI Act is not static. It is a living framework that will evolve alongside the technology it regulates.

Frequently Asked Questions

Is generative AI affected by the EU AI Act?

Yes. Providers of general-purpose AI models have their own obligations, and depending on how it is used, a generative AI system may also fall under transparency or high-risk requirements.

Does the law apply to non-EU companies?

Yes. The law applies to organizations outside the EU when their AI systems are placed on the EU market or their outputs are used in the EU.

When will penalties start being enforced?

Banned practices are enforced first, followed by high-risk obligations after the grace period.

Final Thoughts

The surge in EU AI Act news reflects a broader shift in how societies govern artificial intelligence. Regulation is no longer theoretical—it is operational. For businesses and developers, the most important step now is understanding where their systems fall within the risk framework and preparing accordingly.

Those who adapt early will not only reduce legal exposure but also build trust in a market where responsible AI use is becoming a baseline expectation.